In one of the projects that I worked on, I have two tasks/jobs on Windows Task Scheduler, let say the name of the first job is “FirstJob” and the second job is “SecondJob”. I have a requirement where the “SecondJob” should run immediately after the “FirstJob” has been completed successfully. There are several ways to achieve this. For this particular project, to fulfil the requirement, on the “SecondJob”, I would trigger the “SecondJob” based “On an event”, which the the successful completion of the “FirstJob”.

Monday, March 4, 2019

Friday, January 4, 2019

Executing xp_cmdshell with Non SysAdmin Account

In SQL Server, xp_cmdshell is an extended stored procedure that is stored in the master database that would allow us to execute Windows command shell. By default, the ability to execute xp_cmdshell is disabled. We could enable the ability to run xp_cmdshell by executing the following query against the SQL Server instance:

Wednesday, February 21, 2018

Windows 10 – Mobile Hotspot

A few weeks ago, I was doing a demo on a couple of Alexa skills that I've developed using Amazon Echo Dot. However, the place where I have to do the demo use enterprise class Wi-Fi (it is where after connecting to the Wi-Fi network, we need to authenticate using web browser). Unfortunately, at the moment, as far as I know, Amazon Echo Dot doesn't support enterprise class Wi-Fi. To make matter worse, my cell phone signal was very weak in the meeting room, so I was unable to use my cell phone as hotspot.

Tuesday, December 19, 2017

PASS Summit Video Recordings

PASS (Professional Association for SQL Server) Summit is the largest conference for data professional working with Microsoft SQL Server and Business Intelligence stack. Typically, PASS would have the Summit once a year (in the fall) in Seattle, Washington. PASS Summit offers a wealth of knowledge. Each year, there are hundreds of sessions on various SQL Server and Business Intelligence topics presented by industry experts and also Microsoft employees. If you are a data professional, who are working with Microsoft SQL Server and Business Intelligence, PASS Summit is a great event to learn and network with fellow data professionals. If you were unable to attend the past PASS Summits (or if you attended the past PASS Summits and want to relive and review those sessions), you can go to SQL PASS site and watch the past PASS Summit Session Recordings for free. As of the writing of this blog post, you can view recordings for PASS Summit 2015 and older for free (you need to be a member of SQL PASS but the registration is free). Even though they might be a few years old, they are excellent learning resources.

By the way, if you can attend PASS Summit in person, I would recommend it. PASS Summit 2018 is going to be in Seattle, Washington, in November 2018. You can get more information from PASS Summit 2018 site.

Wednesday, July 22, 2015

Remove Log Shipping When Secondary Server Not Available

The other day, I came accross a scenario where I need to remove log shipping from a database on the primary server. However, we have already shutdown/decommissioned the secondary server. If we try to disable the log shipping from the database properties window, we would most like get the following:

Monday, July 20, 2015

Reflection on Steve Stedman's Database Corruption Challenge

Over the past few months, Steve Stedman (blog | twitter) has been conducting database corruption challenge. It is a challenge where Steve would post corrupt SQL Server databases and the participants would try to fix the corruption to the best of their ability. The participants would need to avoid any data loss when trying to fix the database corruption.

Friday, May 15, 2015

Change Data Capture (CDC) and Simple Recovery Mode

One of the misconceptions that some people have about change data capture (CDC) in SQL Server is that it needs the database to be set up with full recovery mode. If we look at the following TechNet article, it mentioned:

It should be noted that change data capture works with all recovery models. But when change data capture is enabled, operations that would normally be minimally logged in simple or bulk-logged recovery models are fully logged to enable change data capture to capture all changes.

Tuesday, May 5, 2015

SQL Script: Database Auto Grow and/or Auto Shrink Report

Below is a SQL script that can be used to list recent auto grow and/or auto shrink events that happened on your SQL Server. It queries the SQL Server default trace. By default, default trace is enabled (you can check this by running: sp_configure 'default trace enabled').

Wednesday, April 29, 2015

PowerShell Script: List SQL Server Analysis Service Role Permission By Database and Cube

Wednesday, April 22, 2015

PowerShell Script: Adding Database into Always On Group

Every once in a while, I have a new database that I need to put into an existing Always On group. So I have decided to create a PowerShell script that would do just that. The script can be found here.

Tuesday, April 14, 2015

Stale View

If you have non-schema bound view in SQL Server and the underlying table was updated, you potentially can have a stale view. A quick way to demonstrate this:

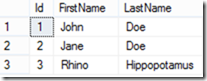

Let say that you have the following table:

CREATE TABLE dbo.Contact (

Id INT IDENTITY(1,1) PRIMARY KEY,

FirstName VARCHAR(10),

LastName VARCHAR(10));

GO

INSERT INTO dbo.Contact VALUES ('John', 'Doe');

INSERT INTO dbo.Contact VALUES ('Jane', 'Doe');

Then, create a simple view against the newly created table:

CREATE VIEW dbo.vwContact

AS

SELECT *

FROM dbo.Contact

GO

If we run the following query:

SELECT Id, FirstName, LastName

FROM dbo.vwContact

We would get the expected result:

Now, what happen if we add a new column to the Contact table:

ALTER TABLE dbo.Contact

ADD Country VARCHAR(10) NULL;

GO

UPDATE dbo.Contact

SET Country = 'USA'

WHERE Id = 1;

If we run the following query against the vwContact view:

SELECT Id, FirstName, LastName, Country

FROM dbo.vwContact;

We would get the following error message:

Msg 207, Level 16, State 1, Line 28

Invalid column name 'Country'.

That’s odd, it looks like the view does not recognize the newly added Country column.

Ok, let say that your company got a new contact, his name is Mr. Rhino Hippopotamus. Obviously, you will need to have a bigger last name column. So you decide to change the LastName column from varchar(10) to varchar(20).

ALTER TABLE dbo.Contact

ALTER COLUMN LastName VARCHAR(20);

GO

INSERT INTO dbo.Contact VALUES ('Rhino', 'Hippopotamus', 'USA');

GO

If you try to query the view using the following query (let’s forget about the Country column for now):

SELECT Id, FirstName, LastName

FROM dbo.vwContact;

That seems to work just fine. You get the expected result:

But, what happen if you try to run the same query from another server, using a linked server:

SELECT Id, FirstName, LastName

FROM ServerA.TestDB.dbo.vwContact;

Instead of getting the expected result, we got the following error:

Msg 7347, Level 16, State 1, Line 1

OLE DB provider 'SQLNCLI11' for linked server 'HSQL01' returned data that does not match expected data length for column '[ServerA].[TestDB].[dbo].[vwContact].LastName'. The (maximum) expected data length is 10, while the returned data length is 12.

If we check on the column property for the LastName column of vwContact view from the server that has the linked server to the original server, using the following query, we would notice that somehow, the LastName column is still listed as having 10 characters long:

USE [master];

GO

EXEC sp_columns_ex 'ServerA', 'vwContact', 'dbo', 'TestDB', 'LastName', NULL;

Solution

So how to address the issue that we are having with stale view. There are several ways:

We could drop and recreate the view. In the above example, we can run the following query:

IF (OBJECT_ID('dbo.vwContact') IS NOT NULL)

DROP VIEW dbo.vwContact

GO

CREATE VIEW dbo.vwContact

AS

SELECT *

FROM dbo.Contact

GOWe could also run an alter view statement:

ALTER VIEW dbo.vwContact

AS

SELECT *

FROM dbo.Contact

GO

Alternatively, we could refresh the view using the following script:

EXEC sp_refreshview 'dbo.vwContact';

Tuesday, March 24, 2015

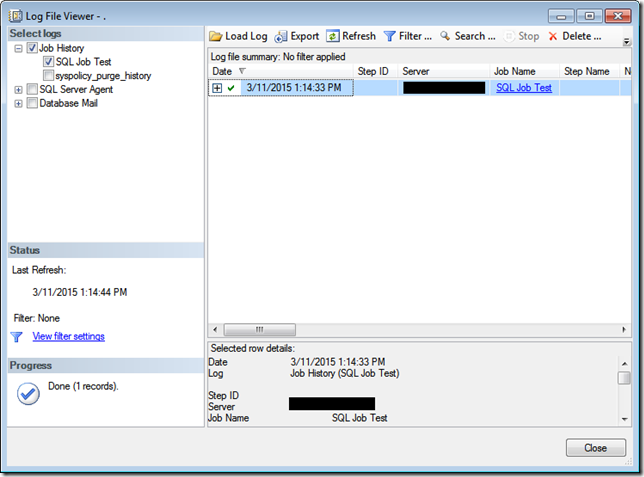

SQL Server Agent Job Last Run Time and Result

I've seen situations in which we have some SQL Server Agent jobs that are scheduled to run very rarely (like once every year or once every half year). Either because of audit or troubleshooting, we need to find out those SQL Server Agent jobs last run time and their outcome. However, the SQL Server Agent history is being purged every few months (Notes: purging SQL Server Agent history periodically is not a bad thing, it is actually a good practice to keep the msdb database size manageable, esp. if your environment has lots of SQL Server Agent jobs, in which many of them have lots of job steps, and some of the jobs are scheduled to run frequently - every few minutes or even seconds).

Are we out of luck? Well not necessarily.

Demo

First create a SQL Agent job with the following script:

USE [msdb]

GO

BEGIN TRANSACTION

DECLARE @ReturnCode INT

SELECT @ReturnCode = 0

IF NOT EXISTS (SELECT name FROM msdb.dbo.syscategories WHERE name=N'[Uncategorized (Local)]' AND category_class=1)

BEGIN

EXEC @ReturnCode = msdb.dbo.sp_add_category @class=N'JOB', @type=N'LOCAL', @name=N'[Uncategorized (Local)]'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

END

DECLARE @jobId BINARY(16)

EXEC @ReturnCode = msdb.dbo.sp_add_job @job_name=N'SQL Job Test',

@enabled=1,

@notify_level_eventlog=0,

@notify_level_email=0,

@notify_level_netsend=0,

@notify_level_page=0,

@delete_level=0,

@description=N'No description available.',

@category_name=N'[Uncategorized (Local)]',

@owner_login_name=N'sa', @job_id = @jobId OUTPUT

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobstep @job_id=@jobId, @step_name=N'Job Step 1',

@step_id=1,

@cmdexec_success_code=0,

@on_success_action=3,

@on_success_step_id=0,

@on_fail_action=2,

@on_fail_step_id=0,

@retry_attempts=0,

@retry_interval=0,

@os_run_priority=0, @subsystem=N'TSQL',

@command=N'select 1',

@database_name=N'master',

@flags=0

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobstep @job_id=@jobId, @step_name=N'Job Step 2',

@step_id=2,

@cmdexec_success_code=0,

@on_success_action=1,

@on_success_step_id=0,

@on_fail_action=2,

@on_fail_step_id=0,

@retry_attempts=0,

@retry_interval=0,

@os_run_priority=0, @subsystem=N'TSQL',

@command=N'select 2',

@database_name=N'master',

@flags=0

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_update_job @job_id = @jobId, @start_step_id = 1

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobserver @job_id = @jobId, @server_name = N'(local)'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

COMMIT TRANSACTION

GOTO EndSave

QuitWithRollback:

IF (@@TRANCOUNT > 0) ROLLBACK TRANSACTION

EndSave:

GO

Then we run the job using the following script:

USE [msdb];

GO

EXEC sp_start_job @job_name=N'SQL Job Test';

GO

Once that has been completed, there should be a job history for the SQL Server agent job:

Now purge the job history by running the following:

USE [msdb];

GO

EXEC dbo.sp_purge_jobhistory @job_name=N'SQL Job Test';

GO

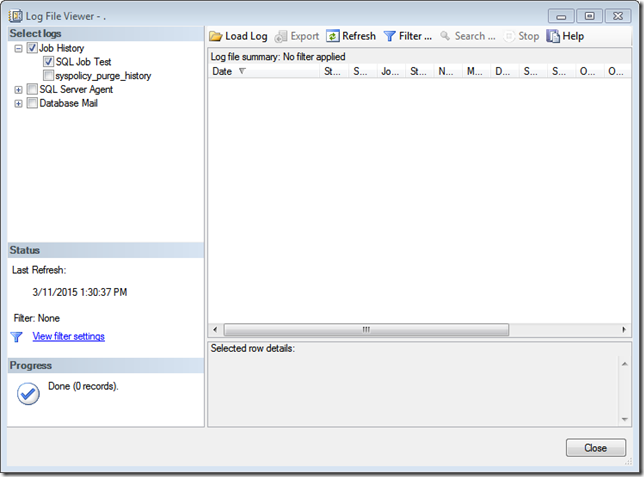

After purging the job history, we will no longer have the entries for “SQL Job Test” job on dbo.sysjobhistory table in the msdb database.

If we try to query the sysjobhistory, using the following query, we would get 0 row:

USE [msdb];

GO

SELECT *

FROM dbo.sysjobhistory

WHERE job_id IN (

SELECT job_id

FROM dbo.sysjobs

WHERE [name] = N'SQL Job Test');

Or this query:

USE [msdb];

GO

EXEC sp_help_jobhistory @job_name = N'SQL Job Test';

GO

Gulp! No more history for SQL job “SQL Job Test”. But wait, if we look at the job properties, we can see when the job was last executed.

So the information must still be in the database. If we try to run the following query:

USE [msdb];

GO

EXEC sp_help_job @job_name = N'SQL Job Test';

We can see the job’s last run date, time and even outcome (For the definition of last_run_outcome column, you can check the following MSDN article). There’s more. When you run the sp_help_job stored procedure, you can also see each of the job step’s last run date, time and outcome.

If we investigate it further, the last run date, time and outcome are stored on sysjobservers and sysjobsteps within the msdb database. We can query them using the following:

USE [msdb];

GO

SELECT j.job_id,

j.Name,

js.last_run_outcome,

last_run_outcome_desc = CASE

WHEN js.last_run_outcome = 0

THEN 'Failed'

WHEN js.last_run_outcome = 1

THEN 'Succeeded'

WHEN js.last_run_outcome = 3

THEN 'Canceled'

ELSE 'Unknown'

END,

js.last_outcome_message,

last_run_datetime = dbo.agent_datetime(

CASE WHEN js.last_run_date = 0 THEN NULL ELSE js.last_run_date END,

CASE WHEN js.last_run_time = 0 THEN NULL ELSE js.last_run_time END),

js.last_run_duration

FROM dbo.sysjobs j

INNER JOIN dbo.sysjobservers js

ON j.job_id = js.job_id

Or if you want to see it for each of the job’s steps:

USE [msdb];

GO

SELECT j.job_id,

j.Name,

js.last_run_outcome,

last_run_outcome_desc = CASE

WHEN js.last_run_outcome = 0

THEN 'Failed'

WHEN js.last_run_outcome = 1

THEN 'Succeeded'

WHEN js.last_run_outcome = 3

THEN 'Canceled'

ELSE 'Unknown'

END,

js.last_outcome_message,

last_run_datetime = dbo.agent_datetime(

CASE WHEN js.last_run_date = 0 THEN NULL ELSE js.last_run_date END,

CASE WHEN js.last_run_time = 0 THEN NULL ELSE js.last_run_time END),

js.last_run_duration,

jst.step_id,

jst.step_name,

step_last_run_outcome = jst.last_run_outcome,

step_last_run_outcome_desc = CASE

WHEN jst.last_run_outcome = 0

THEN 'Failed'

WHEN jst.last_run_outcome = 1

THEN 'Succeeded'

WHEN jst.last_run_outcome = 2

THEN 'Retries'

WHEN jst.last_run_outcome = 3

THEN 'Canceled'

ELSE 'Unknown'

END,

step_last_run_datetime = dbo.agent_datetime(

CASE WHEN jst.last_run_date = 0 THEN NULL ELSE jst.last_run_date END,

CASE WHEN jst.last_run_time = 0 THEN NULL ELSE jst.last_run_time END)

FROM dbo.sysjobs j

INNER JOIN dbo.sysjobservers js

ON j.job_id = js.job_id

INNER JOIN dbo.sysjobsteps jst

ON j.job_id = jst.job_id

WHERE j.name = N'SQL Job Test';

Clean-up

To clean up the demo, you can run the following script:

USE [msdb];

GO

EXEC sp_delete_job @job_name = N'SQL Job Test';

GO

File Share Witness

If you have cluster environment and using the file share witness, you can use the following command line (using cluster.exe) to get the location of your file share witness:

cluster.exe res "FileShare Witness" /priv

Or if you prefer to use PowerShell, you can use:

Get-ClusterResource "File Share Witness" | Get-ClusterParameter | fl

Sunday, March 16, 2014

Microsoft Access–Reseed Auto Number

I was developing a small database using Microsoft Access 2010. In that database, I use Auto Number on some of the tables. During the development of the database, I’ve entered a lot of test data into those tables that have auto number.

Then it comes the time to clean up the test data, so that the database can be used for the live data. Deleting the data from the tables is pretty straightforward. However, when I delete the data it does not reset any of the auto number columns that I have. In SQL Server, we could use the “Truncate” command to delete the data in the table and reset the identity column (the auto number) in the table. Or use DBCC CHECKIDENT to reseed the identity column. However, we could not use those in Microsoft Access.

So to reseed auto number in Microsoft Access, you can do the following:

- Delete the data from the table

- Run the “Compact and Repair Database Tools”. It can be found under the “Database Tools” on Microsoft Access ribbon menu.

Monday, February 10, 2014

High Virtual Log Files (VLF) Count

Virtual log files are basically segments of the database log file. Every time the transaction log grow in size, the number of virtual log files would also grow. If you have a database with transaction log that keep growing in small increment each time, you can end up with a lot of virtual log files, and that can be a bad thing.

In SQL Server 2012, if you have a database with more than 10,000 virtual log file, you will get a warning message on the SQL Server logs. To demonstrate this:

First I would create a database called VLFTest. I set the log file for the database to have an initial size of 512 KB (for this size 2 VLF will be created).

USE [master];

IF DB_ID('VLFTest') IS NOT NULL

BEGIN

DROP DATABASE VLFTest;

END;

GO

CREATE DATABASE VLFTest

ON (

NAME = 'VLFTest_Data',

FILENAME = 'C:\Program Files\Microsoft SQL Server\MSSQL11.MSSQLSERVER\MSSQL\DATA\VLFTest_Data.mdf')

LOG ON (

NAME = 'VLFTest_Log',

FILENAME = 'C:\Program Files\Microsoft SQL Server\MSSQL11.MSSQLSERVER\MSSQL\DATA\VLFTest_Log.ldf',

SIZE = 512 KB,

MAXSIZE = 10240 MB,

FILEGROWTH = 512 KB);

GO

Once the database is created, I can check the number of VLF created by running the following query:

DBCC LOGINFO ('VLFTest');The query should return 2 rows, which would indicate that there are two VLFs created.

The next thing I would do is to grow the transaction log size by 512 KB increment for 5,000 times. Since each time we increment the transaction log by 512 KB, 2 new VLFs would be created. Thus, doing it for 5,000 times would cause it to generate 10,000 new VLFs. So at the end I would end up with 10,002 VLFs. I can do this by using the following script:

DECLARE @CurrentSize AS INT,

@LogFileQuery AS NVARCHAR(1000);

--- Get current log file size.

PRINT 'Current log file size is ' + CAST(@CurrentSize AS VARCHAR(1000)) + ' KB';

SELECT @CurrentSize = size*8 FROM sys.master_files WHERE database_id = DB_ID('VLFTest') AND name = 'VLFTest_Log'

--- Try to grow the transaction log by 512 KB. This should result in two additional VLF.

PRINT 'Growing the transaction log to ' + CAST((@CurrentSize + 1) AS VARCHAR(1000)) + ' KB';

SET @LogFileQuery = 'ALTER DATABASE VLFTest MODIFY FILE (NAME = VLFTest_Log, SIZE = ' + CAST((@CurrentSize + 512) AS VARCHAR(1000)) + ' KB);';

EXEC (@LogFileQuery);

GO 5000

Now if I run the following query:

DBCC LOGINFO('VLFTest');I would get back 10,002 rows back, which means that I have 10,002 VLFs on VLFTest database’s transaction log.

Now, the warning in SQL Server log unfortunately will not be trigger when the VLF count goes beyond 10,000. I found out that it will be trigger under the following conditions:

- When the SQL Server is restarted

- When the VLF database is set online (after it is set offline)

- When we restore the database

So for a quick test, I try to set the database offline and then back online by using the following query:

USE master;

GO

ALTER DATABASE VLFTest SET OFFLINE;

GO

ALTER DATABASE VLFTest SET ONLINE;

GO

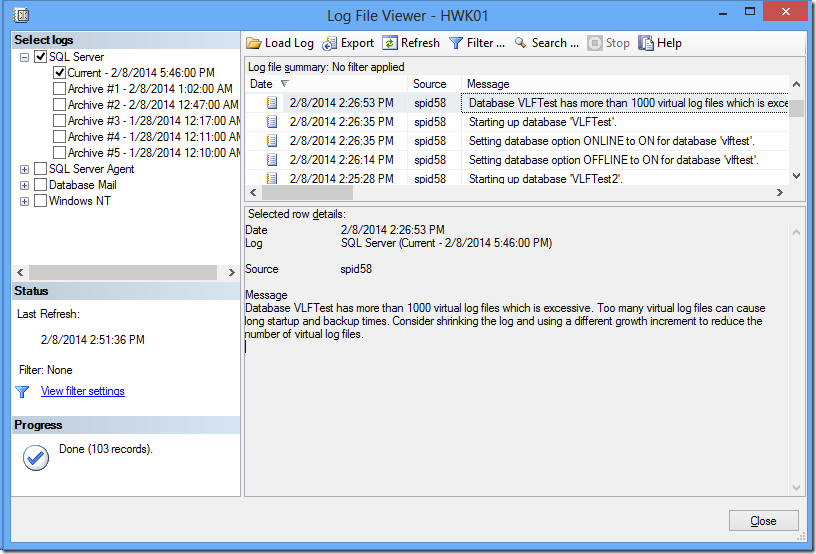

After VLFTest database is back online, when I go to SQL Server log, I would get the following message:

Database VLFTest has more than 1000 virtual log files which is excessive. Too many virtual log files can cause long startup and backup times. Consider shrinking the log and using a different growth increment to reduce the number of virtual log files.

Why Having Too Much of VLFs Count Can Be Bad Thing?

Having an excessive VLFs count can be a bad thing to your database. For one, when I tried to make the database back online after turning it offline, it took longer than expected. When I run the following query:

USE master;

ALTER DATABASE VLFTest SET OFFLINE;

GO

SET STATISTICS TIME ON;

ALTER DATABASE VLFTest SET ONLINE;

GO

After running it 5 times, on average it took about 23 seconds for the database to be online. Now compare this with the following:

USE master;

GO

IF DB_ID('VLFTest2') IS NOT NULL

BEGIN

DROP DATABASE VLFTest2;

END;

GO

CREATE DATABASE VLFTest2

ON (

NAME = 'VLFTest2_Data',

FILENAME = 'C:\Program Files\Microsoft SQL Server\MSSQL11.MSSQLSERVER\MSSQL\DATA\VLFTest2_Data.mdf')

LOG ON (

NAME = 'VLFTest2_Log',

FILENAME = 'C:\Program Files\Microsoft SQL Server\MSSQL11.MSSQLSERVER\MSSQL\DATA\VLFTest2_Log.ldf',

SIZE = 2560512 KB,

MAXSIZE = 10240 MB,

FILEGROWTH = 1024 KB);

GO

It is basically the same database, but instead of growing the transaction log file from 512 KB to 2,560,512 KB by 512 KB increment (and in the process created 10,002 VLFs), I immediately create the transaction log with size 2,560,512 KB. By doing this, I only have 16 VLFs. Now when I try to run the following query:

USE master;

ALTER DATABASE VLFTest2 SET OFFLINE;

GO

SET STATISTICS TIME ON;

ALTER DATABASE VLFTest2 SET ONLINE;

GO

After running it 5 times, on average it took about 0.2 seconds to bring VLFTest2 database online. That’s quite a huge difference, 23 seconds vs. 0.2 seconds (about 2,200% difference).

I also noticed big difference in the time that it took to restore VLFTest1 database (with 10,002 VLFs) compared to the VLFTest2 database (with 16 VLFs). It took about 1 minutes and 35 seconds to restore VLFTest1 database, but it only took about 6 seconds to restore VLFTest2.

In addition to the above, having an excessive number of VLFs in your database can also have an impact on the database transaction. A while ago, Linchi Shea wrote a blog post regarding this. It can be found here.

Take Away

Having a high count of VLFs can hurt your database. To avoid it, we need to carefully plan our database size, especially in this case, the transaction log size. We also need to make sure that the transaction file growth size is not too small. This is to ensure that we don’t end up with many auto growth on the transaction log file, which cause the VLFs to grow.

Further Reading

You might also want to read Kimberly Tripp’s blog post regarding VLF. It can be found here.

Thursday, February 6, 2014

Utilizing smtp4dev with SQL Server Database Mail for Testing

SQL Server has database mail, a solution which would allow us to send e-mail from our database server. It allows us to send either e-mail in html or text format to users. It’s great because we can use it to send query results to users (or ourselves). We can also configure SQL Server Agent to use database mail to send alerts to operator.

Database mail utilizes SMTP server to send the e-mails. In some cases, we might not have a readily available SMTP server in our environment (like in my home lab environment) or we might be block from using the SMTP server from our development environment for security reason. In those situations, I’ve been using smtp4dev. It is a neat little utility that would allow you to have a dummy SMTP server on your local computer. It is lightweight and also no installation is needed. When we run the utility, it would sit on the system tray and listen to port 25 (default SMTP port). It will receive the e-mail that get send to its way, without sending the e-mail to its final destination (the e-mail recipients). It is pretty handy when we need to run some tests.

Setting up database mail to work with smtp4dev is pretty simple.

- Download smtp4dev from http://smtp4dev.codeplex.com.

- Extract the file and save it on your system (assuming that it is your development system and it has the SQL Server).

- Run smtp4dev. Notes: If you have Windows Firewall turned on, you might get a “Windows Security Alert” that ask you if you want to allow smtp4dev to communicate with either the private network, public network or both. Since normally I run smtp4dev on my development system with also has SQL Server that I want to have database mail turn on, I would just hit the cancel button.

- If database mail in SQL Server has not been enable, we can enable it by using the following SQL script:

exec sp_configure 'show advanced', 1;

go

reconfigure;

go

exec sp_configure 'Database Mail XPs', 1;

go

reconfigure;

go

- We then need to set up database mail profile, account and then associate the profile with the account in SQL Server. To do that, we can use the following script (based on the “Database Mail Simple Configuration Template”):

DECLARE @profile_name sysname,

@account_name sysname,

@SMTP_servername sysname,

@email_address NVARCHAR(128),

@display_name NVARCHAR(128),

@error_display NVARCHAR(500);

-- Profile name. Replace with the name for your profile

SET @profile_name = 'SMTP4Dev';

-- Account information. Replace with the information for your account.

SET @account_name = 'SMTP4Dev Account';

SET @SMTP_servername = 'localhost'; --- Since the smtp4dev is on local system.

SET @email_address = 'john.doe@email.com';

SET @display_name = 'John Doe';

-- Verify the specified account and profile do not already exist.

IF EXISTS (SELECT * FROM msdb.dbo.sysmail_profile WHERE name = @profile_name)

BEGIN

SET @error_display = 'The specified Database Mail profile (' + @profile_name + ') already exists.';

RAISERROR(@error_display, 16, 1);

GOTO done;

END;

IF EXISTS (SELECT * FROM msdb.dbo.sysmail_account WHERE name = @account_name )

BEGIN

SET @error_display = 'The specified Database Mail account (' + @account_name + ') already exists.';

RAISERROR(@error_display, 16, 1) ;

GOTO done;

END;

-- Start a transaction before adding the account and the profile

BEGIN TRANSACTION ;

DECLARE @rv INT;

-- Add the account

EXECUTE @rv=msdb.dbo.sysmail_add_account_sp

@account_name = @account_name,

@email_address = @email_address,

@display_name = @display_name,

@mailserver_name = @SMTP_servername;

IF @rv<>0

BEGIN

SET @error_display = 'Failed to create the specified Database Mail account (' + @account_name + ').';

RAISERROR(@error_display, 16, 1) ;

GOTO done;

END

-- Add the profile

EXECUTE @rv=msdb.dbo.sysmail_add_profile_sp

@profile_name = @profile_name ;

IF @rv<>0

BEGIN

SET @error_display = 'Failed to create the specified Database Mail profile (' + @profile_name + ').';

RAISERROR(@error_display, 16, 1);

ROLLBACK TRANSACTION;

GOTO done;

END;

-- Associate the account with the profile.

EXECUTE @rv=msdb.dbo.sysmail_add_profileaccount_sp

@profile_name = @profile_name,

@account_name = @account_name,

@sequence_number = 1 ;

IF @rv<>0

BEGIN

SET @error_display = 'Failed to associate the speficied profile with the specified account (' + @account_name + ').';

RAISERROR(@error_display, 16, 1) ;

ROLLBACK TRANSACTION;

GOTO done;

END;

COMMIT TRANSACTION;

done:

GO

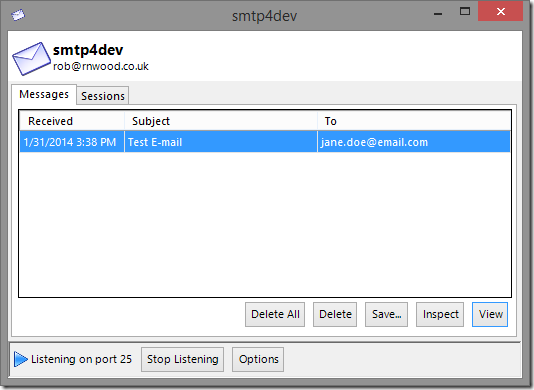

- Now the moment of truth, we can test to see if it works by trying to send an e-mail using sp_send_dbmail

exec msdb..sp_send_dbmail @profile_name = 'SMTP4Dev',

@recipients = 'jane.doe@email.com',

@subject = 'Test E-mail',

@body = 'This is a test email.';

If everything works as plan hopefully on your tray notification, you would see the following message:

And if you open smtp4dev, you should see the following:

To view the e-mail, you can click on the “View” or “Inspect” button.

Some caveats that I’ve noticed:

- I have Microsoft Office 2013 installed on my system, which includes Outlook 2013. For some reason, when I click the “View” button, I would get an error message “The attempted operation failed. An object could not be found.” and it just would not open the e-mail (if I try to open it using Outlook 2013). It is ok if I use Windows Live Mail 2012. I would normally use the “Inspect” button instead.

- If you exit out smtp4dev, the e-mails that you have on the Messages tab will get deleted.

Cleaning Up

If you want to remove the database mail from your SQL Server, first you want to remove the profile association with account, the profile and the account. You can do that by using the following script:

DECLARE @profile_name sysname,

@account_name sysname,

@error_display nvarchar(500);

-- Profile name. Replace with the name for your profile

SET @profile_name = 'SMTP4Dev';

-- Account information. Replace with the information for your account.

SET @account_name = 'SMTP4Dev Account';

IF NOT EXISTS (SELECT * FROM msdb.dbo.sysmail_profile WHERE name = @profile_name)

BEGIN

SET @error_display = 'The specified Database Mail profile (' + @profile_name + ') does not exists.';

RAISERROR(@error_display, 16, 1);

GOTO done;

END

IF NOT EXISTS (SELECT * FROM msdb.dbo.sysmail_account WHERE name = @account_name)

BEGIN

SET @error_display = 'The specified Database Mail account (' + @account_name + ') does not exists.';

RAISERROR(@error_display, 16, 1);

GOTO done;

END

-- Start a transaction before deleting the profile account, profile and account.

BEGIN TRANSACTION ;

DECLARE @rv INT;

EXEC @rv=msdb.dbo.sysmail_delete_profileaccount_sp

@profile_name = @profile_name,

@account_name = @account_name;

IF @rv<>0

BEGIN

SET @error_display = 'Failed to delete the profile with the specified account (' + @account_name + ').';

RAISERROR(@error_display, 16, 1) ;

ROLLBACK TRANSACTION;

GOTO done;

END;

EXEC @rv=msdb.dbo.sysmail_delete_profile_sp

@profile_name = @profile_name;

IF @rv<>0

BEGIN

SET @error_display = 'Failed to delete the database mail profile (' + @profile_name + ').';

RAISERROR(@error_display, 16, 1) ;

ROLLBACK TRANSACTION;

GOTO done;

END;

EXEC @rv=msdb.dbo.sysmail_delete_account_sp

@account_name = @account_name;

IF @rv<>0

BEGIN

SET @error_display = 'Failed to delete the database mail account (' + @account_name + ').';

RAISERROR(@error_display, 16, 1) ;

ROLLBACK TRANSACTION;

GOTO done;

END;

COMMIT TRANSACTION;

done:

GO

Then after that, you can disabled the database mail by using the following script:

exec sp_configure 'Database Mail XPs', 0;

go

reconfigure;

go

exec sp_configure 'show advanced', 0

go

reconfigure;

go

Monday, January 27, 2014

Unique Filtered Index

Consider a scenario where you need to create a table in which you need to store contact information, and for one of the columns, we need to store passport number for each contact, if one is available. Assuming that passport number is unique for each contact, but not all have passport, how can we ensure the uniqueness for the passport number enter while allowing null values for those contacts who don't have passport number?

Unique constraint/index would come to mind. However, the problem of using unique constraint/index in this scenario is while unique constraint/index would ensure that the data enter is unique, a column with unique constraint/index can only allow one null value in that column.

A quick demo for this using unique index (but the result should be similar if we use unique constraint as well):

IF (OBJECT_ID('dbo.ContactInfo') IS NOT NULL)

BEGIN

DROP TABLE dbo.ContactInfo

END

CREATE TABLE dbo.ContactInfo (

ContactID INT PRIMARY KEY IDENTITY (1,1),

ContactName VARCHAR(100) NOT NULL,

PassportNumber VARCHAR(25) NULL);

CREATE UNIQUE INDEX UI_PassportNumber

ON dbo.ContactInfo(PassportNumber)

Now let's try to test to make sure that we can't enter duplicate passport number:

INSERT INTO dbo.ContactInfo (ContactName, PassportNumber) VALUES ('John Doe', 'B1234567890');

INSERT INTO dbo.ContactInfo (ContactName, PassportNumber) VALUES ('Jane Doe', 'B1234567890');

We would get the following messages:

(1 row(s) affected)

Msg 2601, Level 14, State 1, Line 2

Cannot insert duplicate key row in object 'dbo.ContactInfo' with unique index 'UI_PassportNumber'. The duplicate key value is (B1234567890).

The statement has been terminated.

Which is to be expected, it would insert the information for John Doe just fine, but then it would failed to insert the information for Jane Doe since Jane Doe’s passport number is the same as John Doe’s.

Now let’s test to make sure that we can enter multiple contact info with blank passport number.

INSERT INTO dbo.ContactInfo (ContactName, PassportNumber) VALUES ('Mary Doe', NULL);

INSERT INTO dbo.ContactInfo (ContactName, PassportNumber) VALUES ('Jack Doe', NULL);

We would get the following messages:

(1 row(s) affected)

Msg 2601, Level 14, State 1, Line 2

Cannot insert duplicate key row in object 'dbo.ContactInfo' with unique index 'UI_PassportNumber'. The duplicate key value is (<NULL>).

The statement has been terminated.

It would insert the contact info for Marry Doe just fine, but it would fail when trying to insert the contact info for Jack Doe. This is because the unique constraint can allow only one null value.

Possible Solution

So how we can ensure that our passport column can accept null values while ensure uniqueness for the non-null value? With SQL Server 2008 and above, one possibility is by using the unique filtered index. A quick demo for this one:

IF (OBJECT_ID('dbo.ContactInfo') IS NOT NULL)

BEGIN

DROP TABLE dbo.ContactInfo

END

CREATE TABLE dbo.ContactInfo (

ContactID INT PRIMARY KEY IDENTITY (1,1),

ContactName VARCHAR(100) NOT NULL,

PassportNumber VARCHAR(25) NULL);

CREATE UNIQUE INDEX UI_PassportNumber

ON dbo.ContactInfo(PassportNumber)

WHERE PassportNumber IS NOT NULL

Let’s try to see what happen if we try to enter contact info with duplicate passport number:

INSERT INTO dbo.ContactInfo (ContactName, PassportNumber) VALUES ('John Doe', 'B1234567890');

INSERT INTO dbo.ContactInfo (ContactName, PassportNumber) VALUES ('Jane Doe', 'B1234567890');

We would get the following messages:

(1 row(s) affected)

Msg 2601, Level 14, State 1, Line 2

Cannot insert duplicate key row in object 'dbo.ContactInfo' with unique index 'UI_PassportNumber'. The duplicate key value is (B1234567890).

The statement has been terminated.

That works as expected. Only the record for John Doe that got inserted.

Now let’s try to see what happen if we try to enter multiple contact info with blank passport number:

INSERT INTO dbo.ContactInfo (ContactName, PassportNumber) VALUES ('Mary Doe', NULL);

INSERT INTO dbo.ContactInfo (ContactName, PassportNumber) VALUES ('Jack Doe', NULL);

We would get the following messages:

(1 row(s) affected)

(1 row(s) affected)

Both records are being inserted just fine, which what we are expecting.

Conclusion

We can use unique filtered index as a way to enforce uniqueness on filtered data. In the example above, it is to enforce uniqueness on a not null value on the passport number column. There is one caveat. Unlike unique constraint/index where it can be referenced by a foreign key constraint, unique filtered index can’t be referenced by a foreign key constraint.

So if we continue on from the above examples and try to create a new table named PassportVisit with the following definition:

IF (OBJECT_ID('dbo.PassportVisit') IS NOT NULL)

BEGIN

DROP TABLE dbo.PassportVisit

END

CREATE TABLE dbo.PassportVisit (

PassportNumber VARCHAR(25) FOREIGN KEY REFERENCES dbo.ContactInfo(PassportNumber),

DateofVisit DateTime,

CountryofVisit VARCHAR(50))

So we are trying to reference the passport number column on the passport visit table to the passport number on the contact info table. This will be successful if we have a unique constraint/index. But it will fail if we have unique filtered index. It will fail with the following messages:

Msg 1776, Level 16, State 0, Line 6

There are no primary or candidate keys in the referenced table 'dbo.ContactInfo' that match the referencing column list in the foreign key 'FK__PassportV__Passp__73B0DA39'.

Msg 1750, Level 16, State 0, Line 6

Could not create constraint. See previous errors.

Sunday, June 9, 2013

List SSAS User Roles Using PowerShell (Part 2)

A while ago, I have a blog post in regards to using PowerShell to list user roles and also their members. The blog post can be found here. I’ve gotten a few questions about expanding the PowerShell script to:

- List user roles and their members for all databases within a particular SQL Server Analysis Services (SSAS) server.

- Output the result into one table

Here’s a PowerShell script that can be use to accomplish those goals

[Reflection.Assembly]::LoadWithPartialName("Microsoft.AnalysisServices")

# SSAS server name variable

$SSASServerName = "ServerName"

# Try to connect to the SSAS server

$SSASServer = New-Object Microsoft.AnalysisServices.Server

$SSASServer.Connect($SSASServerName)

# Object to store the result

$Result = @()

# Get the SSAS databases and loop thru each of them

foreach ($DB in $SSASServer.Databases)

{

# Get the SSAS database

$SSASDatabase = $SSASServer.Databases.Item($DB.name)

# Get the roles available within the SSAS database and loop thru each of them

foreach ($Role in $SSASDatabase.Roles)

{

# Get the members within the role and loop thru each one of them

foreach ($UserName in $Role.Members)

{

# Create a new object that would store the database name, role name and member user name

$ItemResult = New-Object System.Object

$ItemResult | Add-Member -type NoteProperty -name DatabaseName -value $DB.Name

$ItemResult | Add-Member -type NoteProperty -name RoleName -value $Role.Name

$ItemResult | Add-Member -type NoteProperty -name UserName -value $UserName.Name

# Put the item result and append it to the result object

$Result +=$ItemResult

}

}

}

$Result | Select DatabaseName, RoleName, UserName | format-table -auto -wrap | Out-String

Thursday, June 6, 2013

List SQL Server Analysis Services Database Properties Using PowerShell

If you have SQL Server Analysis Services (SSAS) and want to list the SSAS databases using PowerShell, here’s a PowerShell script that can do that:

[Reflection.Assembly]::LoadWithPartialName("Microsoft.AnalysisServices")

# Variable for your SSAS server name

$SSASServerName = "ServerName"

# Connect to the SSAS server

$SSASServer = New-Object Microsoft.AnalysisServices.Server

$SSASServer.Connect($SSASServerName)

# Try to list the SSAS database Name

$SSASServer.Databases | Select NameIt turns out that you can do much more than listing your SSAS database name with this. You can get the SSAS database collation, compatibility level, created date, last processed, etc. To get the full list of properties that are available to you, try to run the following PowerShell script:

[Reflection.Assembly]::LoadWithPartialName("Microsoft.AnalysisServices")

# Variable for your SSAS server name

$SSASServerName = "ServerName"

# Connect to the SSAS server

$SSASServer = New-Object Microsoft.AnalysisServices.Server

$SSASServer.Connect($SSASServerName)

# Try to list the SSAS database properties that are available to you

$SSASServer.Databases | Get-Member -MemberType property | FT -wrap | Out-StringSo now, if I want to get a list of SSAS database name, collation, compatibility level, created data and last processed date, I can run the following PowerShell script:

[Reflection.Assembly]::LoadWithPartialName("Microsoft.AnalysisServices")

# Variable for your SSAS server name

$SSASServerName = "ServerName"

# Connect to the SSAS server

$SSASServer = New-Object Microsoft.AnalysisServices.Server

$SSASServer.Connect($SSASServerName)

# Try to list the SSAS Database Name, Collation, Compatibility Level, Created Time, Estimated Size, Last Processed Date

$SSASServer.Databases | Select Name, Collation, CompatibilityLevel, CreatedTimeStamp, EstimatedSize, LastProcessed | FT -wrap | Out-StringRelated Blog Post:

Friday, May 24, 2013

Ubuntu + VirtualBox 4.2.12 Guest Additions = Low-Graphic Mode Error

I am using VirtualBox to run several test virtual machines. For most part, it has been pretty good. It’s lightweight and relatively easy and simple to use. Recently, I’ve upgraded VirtualBox to version 4.2.12. The upgrade process itself went well.

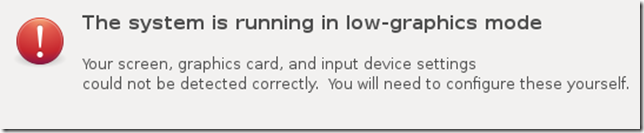

Then I started to update the guest additions on each of the virtual machines. Everything seems to be good… until it’s time to update the guest additions on Ubuntu virtual machine (I have Ubuntu 12.04 LTS). When I installed the guest additions, it seems to went well, it uninstall the previous version and install the guest additions for version 4.2.12. But then after I reboot that virtual machine, I got the following error message/ warning:

The system is running in low-grahics mode

Your screen, graphic card, and input device settings could not be detected correctly. You will need to configure these yourself.

I could not even get into the logon screen. Interesting …

Researching around the internet, it looks like there is a problem with Ubuntu and VirtualBox 4.2.12 guest additions. So I decided to try to revert back the guest addition to VirtualBox 4.2.10 guest additions. I was able to get the ISO for VirtualBox 4.2.10 guest additions from here.

Then I do the following:

- Start the Ubuntu virtual machine

- When I get “The system is running in low-graphics mode” error/warning, I press the CRTL-ALT-F1 to enter the tty1

- In VirtualBox menu for that Ubuntu system, I mount the VirtualBox 4.2.10 guest additions ISO that I have downloaded earlier. I just went to Devices – CD/DVD Devices – Choose a virtual CD/DVD disk file. Then select the ISO file.

- After login in tty1, I ran the command below. It would mount the cd and then run the VirtualBox 4.2.10 guest additions for Linux. It would uninstall the VirtualBox 4.2.12 guest additions and install the VirtualBox 4.2.10 guest additions.

sudo mount /dev/cdrom /cdrom

cd cdrom

sudo sh VBoxLinuxAdditions.run - Then once it’s done, I reboot the system by running the following command:

sudo reboot

Once I did those, the Ubuntu virtual machine would boot fine.

I also have tried a fresh install of Ubuntu (creating a new virtual machine in VirtualBox 4.2.12). It was fine before I installed the guest additions on it... well I can only get 1024x768 as the max screen resolution, but I can access the Unity desktop. However, after I installed the VirtualBox 4.2.12 guest additions and reboot the system, I got the same error/warning message. Installing the VirtualBox 4.2.10 guest additions seems to be ok.